By the Acrolyze Team

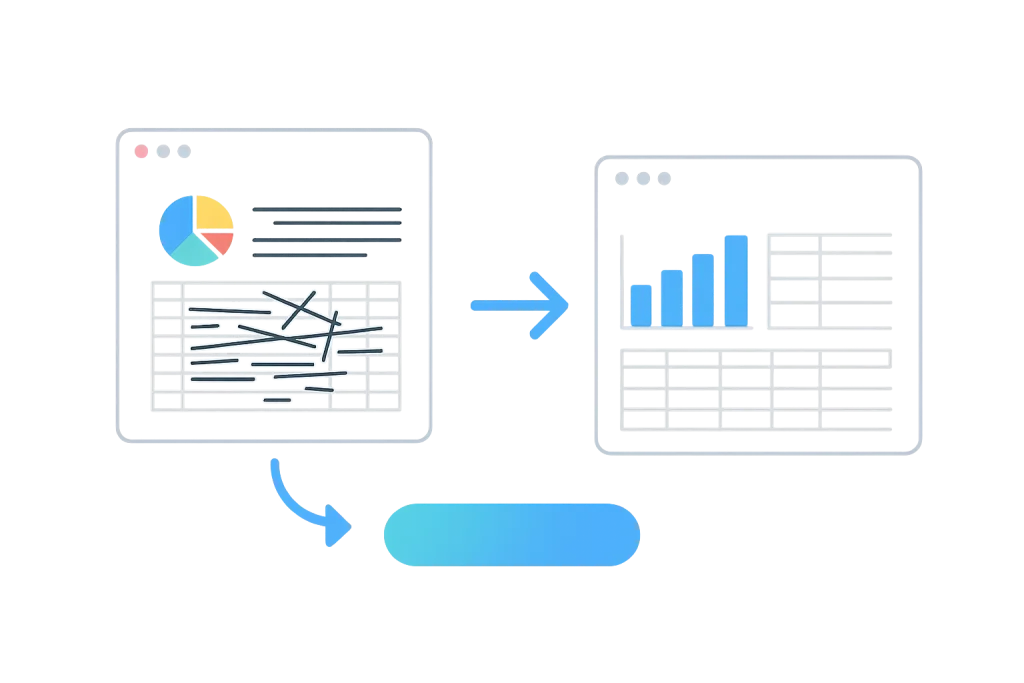

Data cleaning is often estimated to consume 60-80% of a data project's timeline. While this step is crucial for accurate analysis, there are several practical ways to make it more efficient without compromising quality.

Understanding Your Data First

Before making any changes, spend 10 minutes examining your dataset structure. Look at column types, missing values, and obvious inconsistencies. This upfront investment prevents hours of unnecessary work later.

Most spreadsheet and database tools provide summary statistics. Use these features to identify patterns rather than scanning row by row. For example, if you notice that 90% of your “errors” follow a specific format, you can address them in bulk rather than individually.
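
One way to spot such patterns in bulk is to reduce each value to its "shape" and count how often each shape occurs. The sketch below uses only the Python standard library; the function names and the sample values are made up for illustration and would need adapting to your own data.

```python
import re
from collections import Counter

def value_shape(value):
    """Reduce a value to its shape: digits become 9, letters become A."""
    text = "" if value is None else str(value).strip()
    if not text:
        return "<missing>"
    shape = re.sub(r"[0-9]", "9", text)
    return re.sub(r"[A-Za-z]", "A", shape)

def shape_counts(values):
    """Count how many values share each shape."""
    return Counter(value_shape(v) for v in values)

# Hypothetical column of date strings, invented for this example.
dates = ["2023-01-15", "2023-02-01", "15/01/2023", "", "2023-03-09", None]

# Most common shapes first, so the dominant format and its exceptions stand out.
for shape, count in shape_counts(dates).most_common():
    print(f"{shape}: {count}")
```

A column where one shape dominates and a few others trail behind is usually a good candidate for a single bulk fix.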

Standardize Common Formats

Many data issues repeat across datasets. Phone numbers, dates, and addresses often need similar corrections. Create a standard approach for these common problems.

Keep a reference sheet of your most frequent fixes. When you encounter similar issues in future projects, you’ll have tested solutions ready to implement. This approach works particularly well for:

- Date format conversions

- Phone number formatting

- Address standardization

- Name capitalization
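
A reference sheet of fixes can also live as a small module of reusable functions. The following is a minimal sketch, not a complete solution. It makes several assumptions: the list of accepted date formats, day-first ordering for slash-separated dates, and 10-digit US-style phone numbers. Address standardization is left out because it depends heavily on country and data source.

```python
import re
from datetime import datetime

# Assumed input formats; slash-separated dates are treated as day-first.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y")

def standardize_date(text):
    """Return an ISO 8601 date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

def standardize_phone(text):
    """Format a 10-digit number as 555-010-0199, or return None."""
    digits = re.sub(r"\D", "", text)
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop an assumed leading country code
    if len(digits) != 10:
        return None
    return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"

def standardize_name(text):
    """Collapse extra whitespace and capitalize each word."""
    return " ".join(part.capitalize() for part in text.split())

print(standardize_date("15/01/2023"))
print(standardize_phone("(555) 010-0199"))
print(standardize_name("  jANE   doe "))
```

Returning `None` for values that match no known format keeps unfixable records visible for review instead of silently guessing. Simple word capitalization will mishandle names such as "McDonald" or "O'Brien", so treat that function as a starting point.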

Focus on High-Impact Issues

Not every data problem needs immediate attention. Prioritize issues that affect large portions of your dataset or critical analysis fields. A formatting inconsistency in 10 rows may not warrant the same attention as missing values in a key column affecting 1,000 records.

Consider the business impact of each issue. Problems that could lead to incorrect conclusions deserve priority over cosmetic inconsistencies that don’t affect your analysis outcomes.
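
This kind of prioritization can be sketched as a simple ranking: issues in critical columns come first, then issues are ordered by the share of rows they affect. The issue list, column names, and row counts below are hypothetical, and the ranking rule is just one reasonable choice.

```python
def rank_issues(issues, total_rows, critical_columns):
    """Sort issues: critical columns first, then by share of rows affected."""
    def priority(issue):
        is_critical = issue["column"] in critical_columns
        share = issue["affected_rows"] / total_rows
        return (is_critical, share)
    return sorted(issues, key=priority, reverse=True)

# Hypothetical issue log for a dataset of 20,000 rows.
issues = [
    {"name": "inconsistent capitalization", "column": "notes", "affected_rows": 10},
    {"name": "missing values", "column": "revenue", "affected_rows": 1000},
    {"name": "mixed date formats", "column": "signup_date", "affected_rows": 400},
]

ranked = rank_issues(issues, total_rows=20000, critical_columns={"revenue"})
for issue in ranked:
    print(issue["name"], "in", issue["column"])
```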

Use Sampling for Large Datasets

When working with very large datasets, consider cleaning a representative sample first. This approach helps you understand the data quality landscape without processing millions of records upfront.

Choose your sample carefully to represent the full dataset’s characteristics. Document your sampling method so others can understand your approach. This technique works well for initial data exploration and proof-of-concept work.
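
One way to keep a sample representative and documented is to sample within groups using a fixed random seed, so the same sample can be drawn again later. The sketch below assumes the rows are dictionaries and that a `region` column is a sensible grouping key. Both are illustrative choices, not requirements.

```python
import random
from collections import defaultdict

def stratified_sample(rows, key, fraction, seed=42):
    """Sample the same fraction from each group so small groups stay represented."""
    rng = random.Random(seed)  # fixed seed makes the sample reproducible
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)
    sample = []
    for group_rows in groups.values():
        size = max(1, round(len(group_rows) * fraction))
        sample.extend(rng.sample(group_rows, size))
    return sample

# Hypothetical dataset: 750 "north" rows and 250 "south" rows.
rows = [{"id": i, "region": "north" if i % 4 else "south"} for i in range(1000)]

sample = stratified_sample(rows, key="region", fraction=0.1)
print(len(sample), "rows sampled")
```

Recording the grouping key, fraction, and seed alongside the results is usually enough for someone else to reproduce the sample.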

Implement Quality Checkpoints

Build verification steps into your cleaning process. After each major change, check row counts, verify key statistics, and spot-check random samples. These quick validations catch errors early, preventing time-consuming fixes later.

Create a simple checklist of critical data quality measures for your specific use case. Run through this checklist at logical stopping points rather than waiting until the end of your cleaning process.
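
A checklist like this can be turned into a small function that runs after each cleaning step. The checks below (row count change, missing keys, duplicate keys) and the 5% threshold are assumptions chosen for illustration. Substitute the measures that matter for your use case.

```python
def checkpoint(before, after, key_column, max_dropped_fraction=0.05):
    """Return a list of problems found after a cleaning step (empty if none)."""
    problems = []

    # Row count: flag unexpected growth or an unusually large drop.
    dropped = len(before) - len(after)
    if dropped < 0:
        problems.append(f"row count grew by {-dropped}")
    elif before and dropped / len(before) > max_dropped_fraction:
        problems.append(f"dropped {dropped} of {len(before)} rows")

    # Key column: flag missing and duplicate values.
    keys = [row.get(key_column) for row in after]
    present = [k for k in keys if k not in (None, "")]
    if len(present) < len(keys):
        problems.append(f"{len(keys) - len(present)} rows missing {key_column}")
    if len(present) != len(set(present)):
        problems.append(f"duplicate {key_column} values")
    return problems

# Hypothetical before/after snapshots of a cleaning step.
before = [{"id": 1}, {"id": 2}, {"id": 3}]
after = [{"id": 1}, {"id": 1}]

for problem in checkpoint(before, after, key_column="id"):
    print("CHECK FAILED:", problem)
```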

Measuring Your Progress

Track the time you spend on different types of cleaning tasks. This information helps you identify which areas benefit most from automation or process improvements. Many analysts discover that developing better upfront strategies saves more time than optimizing individual cleaning steps.
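
If your cleaning steps are scripted, a small timer can record where the time goes. This is a rough sketch that measures only script run time, not manual work. The task names are placeholders and the `time.sleep` calls stand in for real cleaning steps.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Accumulated seconds per task type.
time_spent = defaultdict(float)

@contextmanager
def track(task_type):
    """Add the elapsed time of the enclosed block to the task's total."""
    start = time.perf_counter()
    try:
        yield
    finally:
        time_spent[task_type] += time.perf_counter() - start

with track("date fixes"):
    time.sleep(0.01)  # placeholder for a real cleaning step
with track("deduplication"):
    time.sleep(0.02)  # placeholder for a real cleaning step

# Report the slowest task types first.
for task, seconds in sorted(time_spent.items(), key=lambda item: item[1], reverse=True):
    print(f"{task}: {seconds:.2f}s")
```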

Getting Started

Choose one technique that addresses your biggest current challenge. Implement it consistently for two weeks, then evaluate the time savings. Small improvements in data cleaning efficiency compound quickly across multiple projects.

Focus on building repeatable processes rather than perfect one-time solutions. The goal is reliable, efficient cleaning that consistently produces analysis-ready data.
